Remote Access Windows Computing (Terminal Servers)

CSDE maintains Microsoft Windows Servers for general use computing through remote access. These permit anyone with a CSDE Windows Network account to sign into our file servers, access datasets, and run statistical software from anywhere in the world on a familiar Windows desktop environment.

If you don’t yet have a CSDE Computing Account, please request one here.

The Terminal Servers are rebooted every Thursday night/Friday morning from 3:00 AM to 5:30 AM PST while we apply updates. They will not be available during this time.

See here for a complete tutorial on accessing the CSDE Windows terminal servers, including links for download and use of Husky OnNet.

There are currently four general-purpose CSDE Windows terminal servers available:

For student users only:

- csde-ts4.csde.washington.edu

- csde-ts5.csde.washington.edu

For faculty/staff/off-site users:

- csde-ts1.csde.washington.edu

- csde-ts2.csde.washington.edu

Using csde-ts4.csde.washington.edu and csde-ts5.csde.washington.edu is slightly different from using csde-ts1 and csde-ts2…

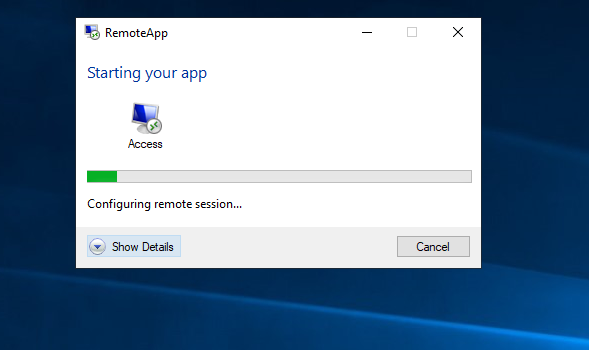

All available applications can be found by opening the “Applications” folder on the desktop. To launch an application, click its shortcut icon (e.g., SPSS 23). After clicking the shortcut icon, you will see a small pop-up window like the one in the following image.

At the same time, a small window will also appear at the lower right-hand corner of the desktop.

After a couple of minutes, the application window should open on the screen.

**** Please note that the first time you launch an application, it may take a bit longer. Please be patient; launch times improve after that. If an application does not launch after you double-click it, double-click it again to relaunch it. Another trick is to click on another application shortcut, which can speed up the appearance of the program. ****

You can also launch an application by clicking the “Start” button at the lower left-hand corner and looking for the application in the list of Programs.

**** If your program is stuck or frozen, you can terminate it by using Task Manager. To do that, click “Start” at the lower left-hand corner and launch the application “Task Manager (remote)”. When the Task Manager window is open, find the frozen application under the “User” tab and end the task. ****

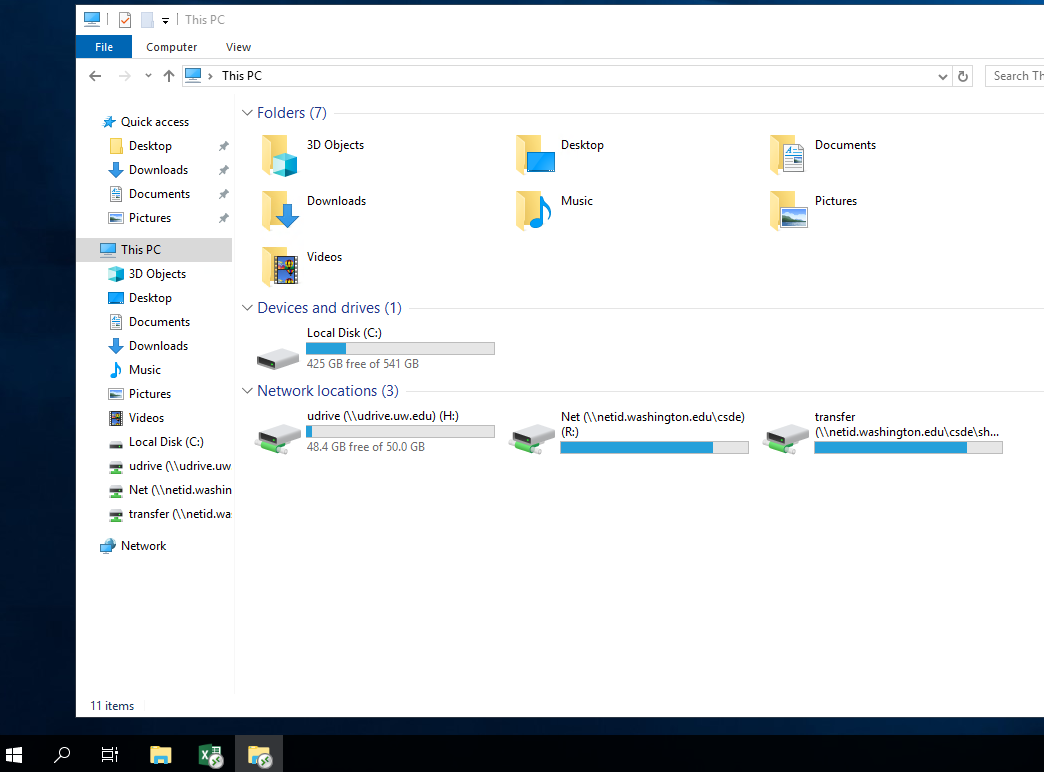

To look for files in your H:, R:, and T: drives, first open the “This PC” shortcut icon on the desktop of your remote desktop session window. This is the most reliable way to locate your files.

To avoid any unnecessary loss of data, please make sure to save your work frequently and do not leave work unsaved if you disconnect from the remote desktop server.

Users should always save their files on their H: drive, which is the same as their UW UDrive. The server is set up to redirect the Documents folder, the Downloads folder, and the Desktop to the user’s UW UDrive.

The following image shows what you will see when you click on the “This PC” shortcut icon. Notice that the icons on the taskbar look slightly different than normal icons since they are programs running on a remote server.

These servers carry a full complement of ready-to-use statistical and social-sciences software packages. The terminal servers have nearly identical software packages installed and provide access to all your personal and project files. All our servers are Dell R6525 PowerEdge servers running Microsoft Windows Server 2022 Operating System. Each has Dual 48-core AMD 7413 processors with more than 1TB of system memory.

Please send your questions to csde_help@uw.edu

There are five drives available for use on CSDE terminal servers. Once logged onto a terminal server, they can be found in the My Computer menu.

| Windows Drive Letter | Use for | Backed Up | Private | Shareable with others |

| H: | The H: drive is your home directory, where personal files can be saved. It can only be accessed by you and is backed up regularly. | Yes | Yes | |

| O: | The O: drive is for CSDE administrative documents and is only available to CSDE personnel. | | | |

| R: | The R: drive is for storage of project files and data sets. Multiple users working on the same project can have read/write access to files/folders. | | | Yes |

| T: | The T: drive is used for temporary storage and file sharing. T: is not backed up, so save copies elsewhere. | No | | Yes |

| U: | The U: drive is used for personal file storage. It can only be accessed by you and is backed up regularly. It can be self-service restored following these UW-IT directions. The U: drive is the same as your UW UDrive. | Yes | Yes | |

Be careful not to permanently store data on the desktop, in the C: drive, or in the Downloads folder. These locations are regularly cleared of files to free up space, so any files lost may be impossible to recover.

Normally, one has to be on campus to view the electronic resources offered by UW Libraries. However, CSDE allows remote access through CSDE’s “Windows Terminal Server,” which all CSDE Affiliates are invited to use. Once you are logged into the server, it allows you to search the UW Libraries systems and use their resources as if you were on campus.

To find a journal article in the UW database, follow the steps below.

- Sign into the terminal server using your CSDE account

- Open a web browser and go to www.lib.washington.edu/

- Enter the journal title into the search box at “UW WorldCat: Search UW Libraries and beyond” on the library homepage

- Journal availability information will be displayed. Use whichever database you would like. You can then search the database for your articles by title/author/keyword/issue/etc.

Simulation Cluster

CSDE’s Simulation Cluster is a group of 2 Dell C6320 PowerEdge Windows Server 2022 servers featuring simulation-specific software intended for computationally intensive work. The Sim Cluster is available for use by CSDE affiliates and students from all CSDE training program partner departments.

To start using the Simulation Cluster, you must first submit a request to gain access.

The Sim Cluster is rebooted on the last Friday of each month and is therefore offline from 3:00 AM to 10:00 AM PST while we apply updates. Please plan your work accordingly.

- All off-campus users must connect their local system to the Husky On-Net VPN.

Faculty, staff, and students, see: Set up Husky On-Net VPN.

Non-UW members, see: Connecting to CSDE’s HON-D Departmental VPN

- Connect to any of the Sim Cluster nodes (e.g., sim3.csde.washington.edu) using Remote Desktop Connection.

- Log in with your NetID username and password.

To start a new session for an additional simulation job, simply open another Remote Desktop Connection window to a different sim node (e.g., sim5.csde.washington.edu).

To resume a job that’s currently running, reconnect to it by logging back in directly to its node. (The My Computer icon on your session’s desktop will indicate which cluster node you’re using; see the image to the right.) For example, if you disconnect from SIM3, your session would continue running there. To reenter and check the status of jobs, you need to use Remote Desktop Connection to connect back to the Sim node with your job.

- List of programs available on CSDE Sim cluster

- Each cluster node is a Dell PowerEdge M640 or C6320 Blade server with 16 2.00GHz Intel Xeon CPUs and 384GB or more RAM. They run Windows Server 2019 (64-bit) Standard Edition.

- Information on the H: and R: drives is backed up to tape, but like the Terminal servers, files on the Desktop and C: drive of the Sim Cluster are not backed up. The Desktop and C: drive are wiped whenever the sim nodes are re-imaged, so make sure you store important data elsewhere. Though we will do our best to minimize disruptions, CSDE is not responsible for data lost due to maintenance reboots or unexpected crashes.

- You are expected to compose a brief note and endorsement for future funding proposals.

- Do not overload the cluster with too many computationally intensive jobs—it prevents others from using the resource.

- If running multiple jobs, start them on several nodes rather than on a single one. Please do not run jobs on more than three sim nodes.

- Report any problems with the sim nodes or software to csde_help@uw.edu.

Unix Systems

Unix systems are characterized by various concepts: the use of plain text for storing data, a hierarchical file system, treatment of devices and certain types of inter-process communication (IPC) as files, and the use of a large number of small programs that can be strung together through a command line interpreter using pipes (as opposed to using a single monolithic program that includes all of the same functionality).

Getting an Account

You must have a CSDE Unix account to use the CSDE Linux systems. The following people qualify for Unix accounts at CSDE:

- Current faculty affiliates

- Current UW students who contribute to the Student Technology Fee

- Off-campus population scientists sponsored by a CSDE faculty affiliate

The parties above may request an account by completing this form.

Getting a UWNetID

If you’re an offsite or new UW member without a UW NetID, or wish to sponsor a UW NetID for a visiting researcher, click here.

If you already have a UW NetID, manage your UW services at the UW NetID management pages here.

All CSDE Unix systems allow incoming connections exclusively via Secure Shell (SSH) v2.

Connect to Husky OnNet VPN

All off-campus users must connect their local system to the Husky OnNet VPN before attempting an SSH or RStudio connection to Linux systems such as Nori, Libra, and Union.

- Faculty, staff, and students, see: Set up Husky On-Net VPN

- Non-UW members, see: Connecting to CSDE’s HON-D Departmental VPN

From Windows

- Several free Secure Shell 2 clients are available for Windows: PuTTY and mRemoteNG. SecureCRT is also available under a UW device license.

- Directions for connecting via a graphical desktop are in the tutorial section Using X2Go.

From Unix

- Connect using the command-line Secure Shell (SSH) client.

- Syntax: ssh <user>@<hostname>

- Example: “ssh dth2@nori.csde.washington.edu”
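As a minimal sketch of the syntax above, the shell function below prints the ssh command for one of the three CSDE Unix hosts named in this section (nori, libra, union). The function name `csde_ssh_command` and its argument order are hypothetical, chosen only for illustration. It prints the command rather than running it, so you can check it before connecting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ssh command for a CSDE Unix host.
# Only the short host names listed on this page are accepted.
csde_ssh_command() {
    local user="$1"
    local host="$2"
    case "$host" in
        nori|libra|union)
            printf 'ssh %s@%s.csde.washington.edu\n' "$user" "$host"
            ;;
        *)
            printf 'unknown CSDE host: %s\n' "$host" >&2
            return 1
            ;;
    esac
}

# Print the command for the example account on nori.
csde_ssh_command dth2 nori
```

Running the printed command from a terminal (after connecting to the VPN if you are off campus) opens the session.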

CSDE Unix Hosts

The following systems are available to all CSDE Unix account holders:

| Host | Model | CPU Clock | CPU Cores | Memory | Swap |

| nori.csde.washington.edu | Dell PowerEdge R630 | 2.53 GHz | 16 | 500 GB | 10 GB |

| libra.csde.washington.edu | Dell PowerEdge R630 | 2.53 GHz | 16 | 500 GB | 10 GB |

| union.csde.washington.edu | Dell PowerEdge R630 | 2.53 GHz | 16 | 500 GB | 10 GB |

RStudio Workbench Professional

To access RStudio Workbench Professional via a web browser, you must have an active CSDE Unix account. Once your account is established, open a browser such as Chrome, Safari, or Firefox and navigate to any of the systems below. For more information, see: RStudio Server web site

- https://union.csde.washington.edu:8787

- https://libra.csde.washington.edu:8787

- https://nori.csde.washington.edu:8787

Note that you should only have one instance of RStudio Workbench running on any given system at a time.

You cannot run sessions of RStudio Workbench Professional on two or more servers simultaneously.

If you need to run multiple R jobs at the same time on more than one of these systems, use the Linux command line to run “R”.

You may also use one or more Hyak nodes to do this as well.
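One way to run R from the command line is to start each R script as a background batch job from an SSH session on each host. The sketch below is an illustration under stated assumptions, not a prescribed CSDE method: the function name `run_in_background`, the `RUNNER` variable, and the script names are hypothetical, while `Rscript` and `nohup` are the standard R and Unix commands.

```shell
#!/usr/bin/env bash
# Command used to run a script. Rscript is R's standard batch front end;
# the RUNNER variable itself is a hypothetical hook for dry runs.
RUNNER="${RUNNER:-Rscript}"

# Start one script in the background, logging to <script name>.log.
# nohup keeps the job alive after you log out of the SSH session.
run_in_background() {
    local script="$1"
    local log="${script%.R}.log"
    nohup "$RUNNER" "$script" > "$log" 2>&1 &
    echo "started $script (PID $!), logging to $log"
}

# Example (hypothetical script names), run once on each host you use:
#   run_in_background model_a.R
#   run_in_background model_b.R
```

Each job writes its output and errors to its own log file, so you can reconnect later and inspect progress with a command such as `tail`.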

More information and instructions for Unix programs, such as X2Go, can be found in the “Connecting to Unix Terminal Servers” section of our tutorials page.

CSDE offers Ubuntu 22.04 Linux servers with software specialized for demographic research and statistics. The machines are available to all CSDE Unix account holders.

General-Access Unix Systems

Everyone with a CSDE Unix account may access union.csde.washington.edu and libra.csde.washington.edu. Both have the following system specifications:

- 64-bit, x86-64, 6.x kernel

- Ubuntu 22.04 (Jammy)

- X2Go capable

- Model: Dell PowerEdge R630

- CPU: 2x Intel(R) Xeon(R) CPU E5-2630L v3 @ 1.80GHz

- Total CPU Cores: 32 with Hyperthreading

- Memory Total: 520 GB

- Swap Total: 20 GB

The following software is available on the General-Access (GA) CSDE Linux Servers:

High-performance computing is handled by the CSDE pool in Hyak, the UW-Wide High Performance Computing cluster. All Hyak access is authenticated by UW NetID and requires two-factor authentication via “DUO”. If you are a UW student, you can also join the UW HPC club and access the larger STF Hyak pool. (You can still connect from the CSDE Unix systems to Hyak if you use this allocation pool.)

To use the CSDE Hyak nodes (Klone is the latest generation, as of 4/2021), all of the following are required:

- CSDE Computing admins must add your UW NetID to the group “u_hyak_csde” to enable access. Request Hyak access from csde_help@u.washington.edu.

- You need to have DUO two-factor enrollment enabled on your UW NetID. CSDE Help must do this on your behalf if you are not a current UW member. If you are a current UW member, you can enroll your device yourself.

- You must enroll a compatible two factor device in the UW Duo system.

- You must add the Hyak server and the Lolo server (storage system) to your UW NetID self-services pages. To do this, click here, click “Computing Services,” check the “Hyak Server” and “Lolo Server” boxes in the “Inactive Services” section, click “Subscribe” at the bottom of the page, and click “Finish.” After subscribing, it may take up to an hour to be fully provisioned.

Connecting to Hyak

You’ll need to SSH into Hyak using your UW NetID username and password. You will be asked to approve the login using DUO two-factor authentication. Depending on which cluster you want, use one of the following hostnames:

- ikt.hyak.uw.edu (retired June 2020)

- mox.hyak.uw.edu (2018 version of the cluster)

- klone.hyak.uw.edu (2021 version of the cluster)

For example, ssh UWNetID@mox.hyak.uw.edu, substituting your user name in place of “UWNetID.” Please use the /gscratch/csde area and create a subdirectory there named with your UW NetID. The lolo collaboration file system is located at /lolo/collaboration/hyak/csde/.
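The subdirectory step above can be sketched as a small shell function to run after you have logged in to Hyak. This is a hedged example: the `SCRATCH_BASE` variable and the function name `make_scratch_dir` are hypothetical, and the default assumes that your Hyak login name (`$USER`) is your UW NetID.

```shell
#!/usr/bin/env bash
# Base of the CSDE scratch area named above; overridable for dry runs.
SCRATCH_BASE="${SCRATCH_BASE:-/gscratch/csde}"

# Create (if needed) and print the per-user working directory.
# Defaults to $USER, assuming your Hyak login name is your UW NetID.
make_scratch_dir() {
    local netid="${1:-$USER}"
    mkdir -p "$SCRATCH_BASE/$netid" && echo "$SCRATCH_BASE/$netid"
}

# After logging in to Hyak:
#   cd "$(make_scratch_dir)"
```

Because `mkdir -p` does nothing when the directory already exists, the function is safe to call at the start of every session.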

Please subscribe to this list for Hyak status updates.

The basic gist of the Hyak cluster is this: you will SSH into the head node of the Hyak system, where you can do minor work or ask the system for an “interactive node” you can ssh directly to and work away. The “intended” way to use the cluster is to make a batch submit script and submit your job to the scheduler. Once you set up your SSH key relationship, you won’t need to use your DUO 2FA login. In a standard Hyak node on the batch system, all software is a “module,” so you’ll have to load the “R” or “Microsoft R open” (Formerly RevolutionR) module. Take a look at “Software Development Tools” here.

Additional information is available below:

- Wiki for Hyak Users

- Tutorials for getting started with version control, UNIX shell, R, etc

- Hyak R programming

- How to use the HYAK Job Scheduler

- Hyak Cluster Monitoring

- Getting started: Hyak 101 (hyak users wiki)

Which Hyak Queue should I use?

Have a lot of parallel workloads to run? Using the backfill queue offers the working potential of thousands of CPU cores.

NOTE: Each job in the backfill queue can only run for ~2 hours before being shut down, so divide your jobs up accordingly and/or use “checkpointing”!
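A minimal sketch of checkpointing, assuming the work can be split into numbered units: the job records the last finished unit in a file, and a restarted job resumes from the next one. The checkpoint file name, the function name `run_units`, and the per-unit work are placeholders, not part of any Hyak tooling.

```shell
#!/usr/bin/env bash
# File that records the last completed work unit (name is an assumption).
CHECKPOINT="${CHECKPOINT:-checkpoint.txt}"

# Process units 1..total, resuming after the last unit recorded in CHECKPOINT.
run_units() {
    local total="$1"
    local i=1
    if [ -f "$CHECKPOINT" ]; then
        i=$(( $(cat "$CHECKPOINT") + 1 ))
    fi
    while [ "$i" -le "$total" ]; do
        echo "processing unit $i"     # placeholder for the real work
        echo "$i" > "$CHECKPOINT"     # record progress after each unit
        i=$(( i + 1 ))
    done
}

# Example: run_units 100   (rerun the same command after an interruption)
```

If the scheduler stops the job partway through, resubmitting the same script continues from the unit after the last one recorded, so at most one unit of work is repeated.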

As a member of the CSDE Hyak allocation, you have access to the few CSDE nodes that we own (currently 4 MOX as of 11/2019) as well as the backfill queue.

CSDE strongly advises you to develop for and use the backfill queue whenever possible.

Run your job on any of 3 queues using the following syntax:

- STF queue (UW students only)*: qsub -W group_list=hyak-stf runsim.sh

- CSDE queue: qsub -W group_list=hyak-csde runsim.sh

- Backfill queue: qsub -W group_list=bf runsim.sh

*If you are a currently enrolled UW student paying the Student Technology Fee, you should join the UW HPC Club. This will allow you to submit jobs to be run in the student node allocation
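To divide a large workload for the backfill queue, one option is to submit the same script once per chunk. The sketch below only prints the qsub commands so they can be reviewed first. It assumes the qsub syntax shown above; the `CHUNK` variable and the function name `print_backfill_submissions` are hypothetical, and `runsim.sh` would need to read `$CHUNK` to decide which part of the work to run.

```shell
#!/usr/bin/env bash
# Print one qsub command per chunk of work for the backfill queue.
# "bf" and runsim.sh come from the list above. qsub's -v option passes
# an environment variable (here the hypothetical CHUNK) to the job script.
print_backfill_submissions() {
    local chunks="$1"
    local script="${2:-runsim.sh}"
    local i=1
    while [ "$i" -le "$chunks" ]; do
        echo "qsub -W group_list=bf -v CHUNK=$i $script"
        i=$(( i + 1 ))
    done
}

# Show the commands for a job split into three chunks.
print_backfill_submissions 3
```

Keeping each chunk well under the backfill time limit means a chunk that is shut down costs only a small amount of repeated work.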

Hyak node purchases

For node options, rates, and other details, please see the UW-IT Service Catalog page for Hyak.

Note that the Arts and Sciences Dean’s office pays for the “Hotel Slots” that CSDE Hyak blades occupy, so the Dean’s office must approve any node purchase.

Please contact CSDE Help for assistance with this process.

Current Node pricing as of 05/10/2021

Nodes are still 40 cores and the different memory configurations are listed below.

192GB nodes at $4,708.94 per node

386GB nodes at $5,513.57 per node

768GB nodes at $7,191.47 per node

Hyak Node Retirement

Blades are deployed for a minimum of three years. Blade deployments may only be extended if there is no demand for the slots they occupy. Because Hyak currently has many unoccupied slots, nodes have continued to run beyond their 3-year minimum lifespan. As long as the nodes continue to operate, they have remained in the original owner’s queues. The IKT cluster ran for almost 10 years and was retired in June 2020.

Click here for Hyak utilization data and here for the inventory.

Citation in Publications

Please remember to acknowledge Hyak in any media featuring results that Hyak helped generate. When citing Hyak, please use the following language:

“This work was facilitated through the use of advanced computational, storage, and networking infrastructure provided by the Hyak supercomputer system at the University of Washington.”

When you cite Hyak, please let us know by emailing help@uw.edu with “Hyak” as the first word in the subject along with a citation we can use in the body of the message. Likewise, please let us know of successful funding proposals and research collaborations to which Hyak contributed.

Hyak is a CSDE resource, so remember to cite CSDE as well! Click here for more information on acknowledging support from CSDE.

Project Folders

On the CSDE servers, you can apply for a Project Folder where you can store data in a location that is easily accessible when you are online.

Please be aware that to be granted use of a project folder, you must be working with a group of at least 2 members. Additionally, try to name your Project Folder as specifically and uniquely as possible, in order to avoid overlap with other existing project folders.

When a project folder is inactive for a long period of time, it will be moved into an inactive folder, and a project folder member must notify csde_help@uw.edu to reactivate it. Should a folder be inactive for too long, it will be deleted altogether. To avoid loss of project data, please either move all necessary content out of the folder or ask us to reactivate it in a timely manner.

Additionally, please note that you should keep the data in the project folder within a reasonable size, so that the storage drive is not overloaded.

CSDE on GitHub

CSDE has an account on GitHub, an easy-to-use, web-based, software-development tool for software and documentation version control and sharing. CSDE Affiliates have used this public code repository for sharing code and data for classes, workshops, and collaborative efforts with the public and other universities. (You can learn more about GitHub here.) You may request access to the CSDE GitHub repository by emailing csde_help@uw.edu and including the email address you registered with GitHub (if you already have an account there).

Additional information about using and securing repositories on GitHub is listed below:

- UW GitHub service (via UW-IT Service catalog entry)

- Securing GitHub Repositories Part 1

- Securing GitHub Repositories Part 2

- Anthro Data Science: GitHub for Anthropologists

Additional Hosting

CSDE also hosts grant-funded project servers, websites, and databases on its secure server. We host Windows Terminal Servers equipped for managing sensitive data and file-backup services. Affiliates can access these services with a yearly fee or ongoing grant funding support. Contact Matt Weatherford for further details.